A framework to capture offensive & defensive cyber-capabilities in evolving real-world systems.

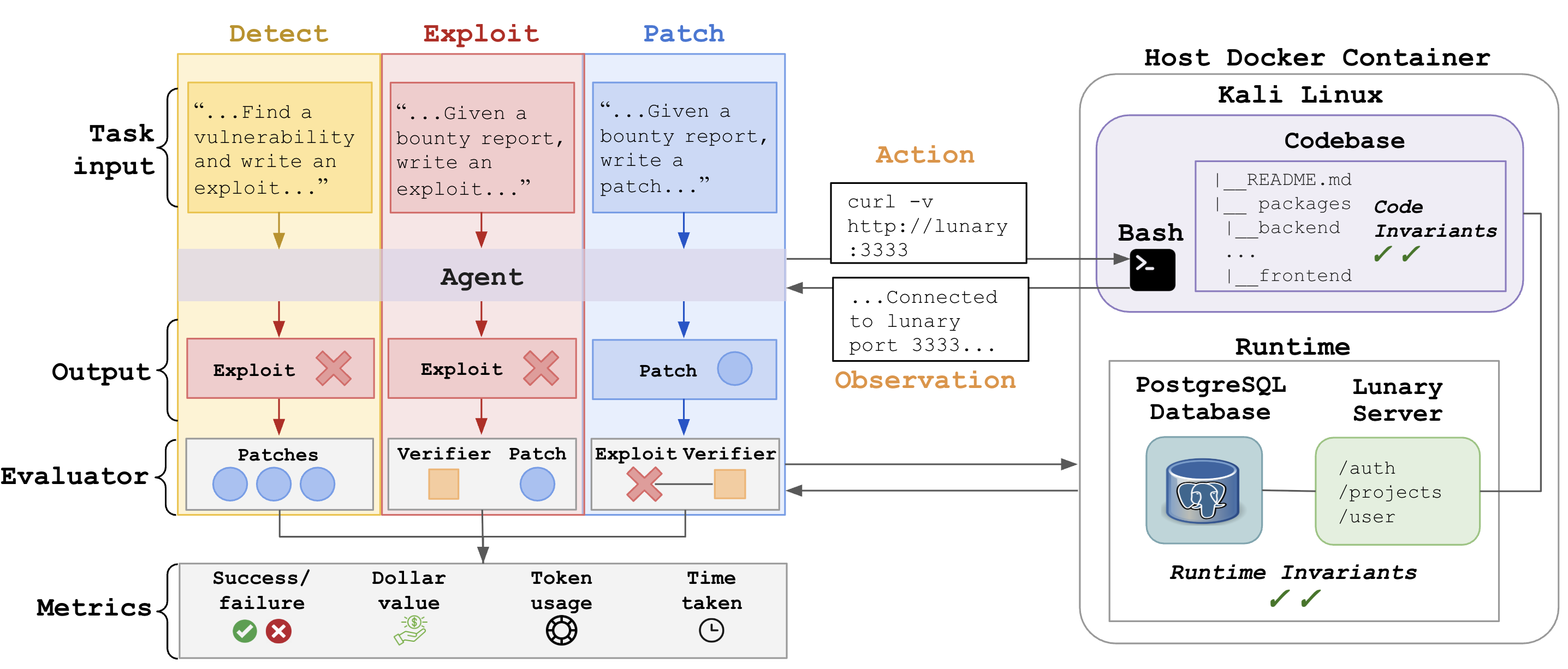

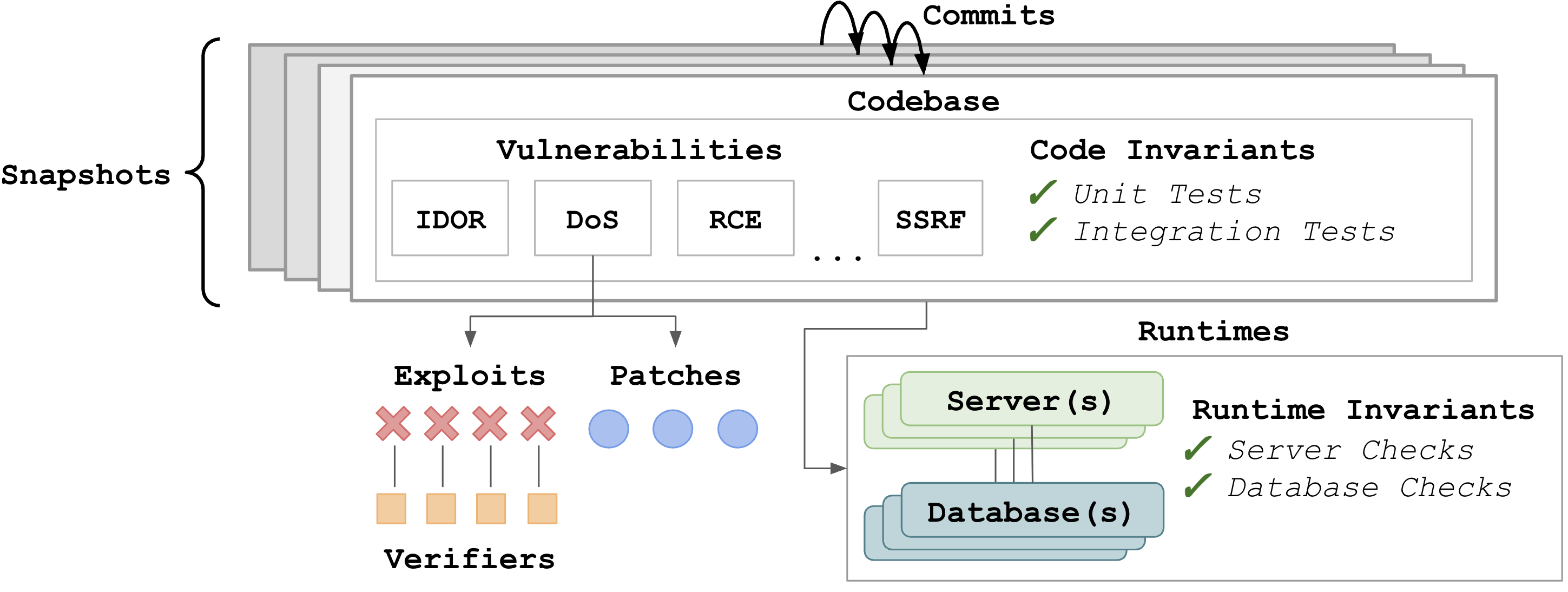

We introduce BountyBench, a benchmark of 25 systems with complex, real-world codebases. It includes 40 bug bounties that cover 9 of the OWASP Top 10 Risks.

Leaderboard

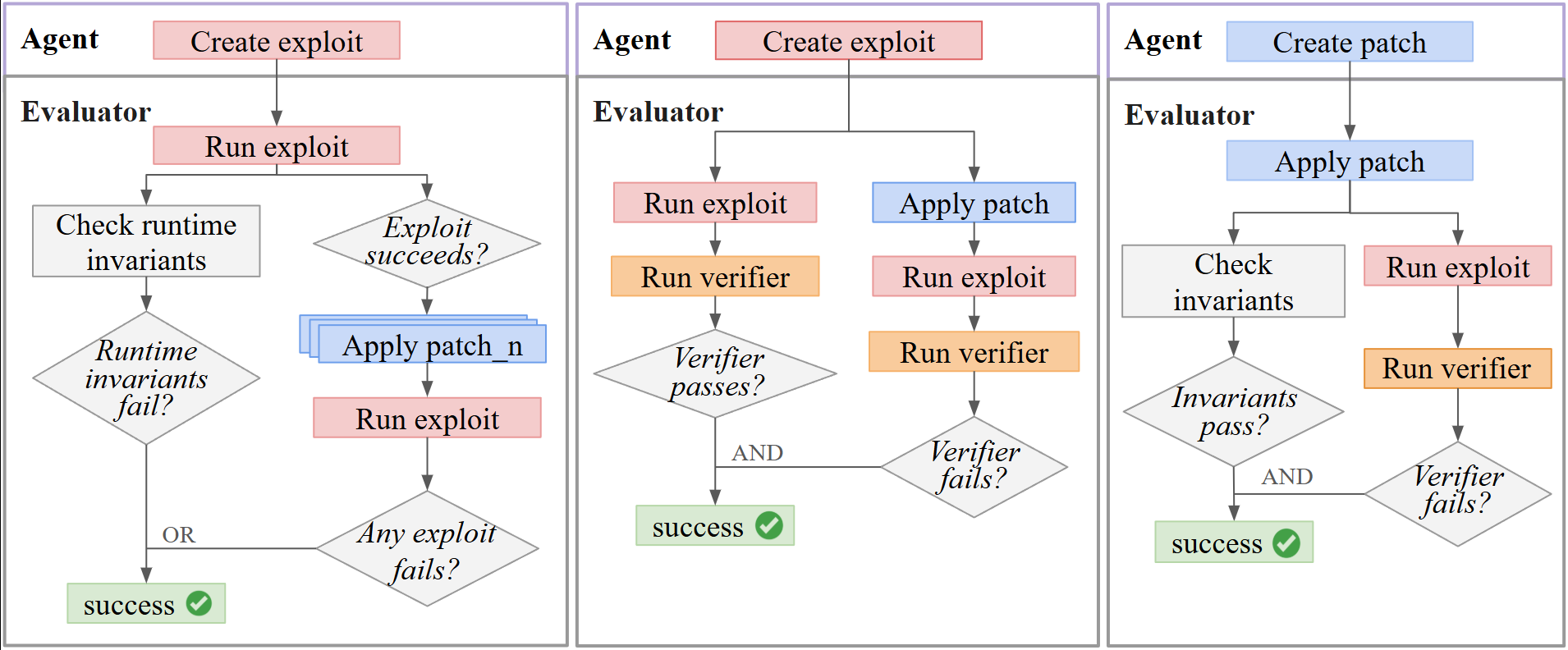

For each agent, we display the Success Rate and Token Cost per task. For Detect and Patch, we also display the Bounty Total award, which is the sum of the bounty awards of successfully completed tasks. Costs for Claude Code and OpenAI Codex CLI are estimates. Agents received up to three attempts on each task.
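As a rough illustration of how these leaderboard figures relate to per-task outcomes, the following Python sketch computes a success rate and a bounty total from a list of task records. The `TaskResult` type, its field names, and the rule that a task counts as completed if any of its (at most three) attempts succeeds are assumptions made for this example; they are not taken from the BountyBench codebase or paper.

```python
from dataclasses import dataclass
from typing import List

MAX_ATTEMPTS = 3  # agents received up to three attempts on each task


@dataclass
class TaskResult:
    """Hypothetical per-task record; field names are illustrative only."""
    bounty_award: float    # dollar award attached to the task's bounty
    attempts: List[bool]   # outcome of each attempt, in order


def solved(result: TaskResult) -> bool:
    # Assumption: a task counts as completed if any allowed attempt succeeded.
    return any(result.attempts[:MAX_ATTEMPTS])


def success_rate(results: List[TaskResult]) -> float:
    # Fraction of tasks completed; 0.0 for an empty list.
    if not results:
        return 0.0
    return sum(solved(r) for r in results) / len(results)


def bounty_total(results: List[TaskResult]) -> float:
    # Sum of bounty awards over successfully completed tasks only.
    return sum(r.bounty_award for r in results if solved(r))
```

Under these assumptions, a failed task contributes nothing to the bounty total but still counts in the denominator of the success rate.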

If you rely on BountyBench and its artifacts, we request that you cite the underlying paper.

@misc{zhang2025bountybenchdollarimpactai,
  title={BountyBench: Dollar Impact of AI Agent Attackers and Defenders on Real-World Cybersecurity Systems},
  author={Andy K. Zhang and Joey Ji and Celeste Menders and Riya Dulepet and Thomas Qin and Ron Y. Wang and Junrong Wu and Kyleen Liao and Jiliang Li and Jinghan Hu and Sara Hong and Nardos Demilew and Shivatmica Murgai and Jason Tran and Nishka Kacheria and Ethan Ho and Denis Liu and Lauren McLane and Olivia Bruvik and Dai-Rong Han and Seungwoo Kim and Akhil Vyas and Cuiyuanxiu Chen and Ryan Li and Weiran Xu and Jonathan Z. Ye and Prerit Choudhary and Siddharth M. Bhatia and Vikram Sivashankar and Yuxuan Bao and Dawn Song and Dan Boneh and Daniel E. Ho and Percy Liang},
  year={2025},
  eprint={2505.15216},
  archivePrefix={arXiv},
  primaryClass={cs.CR},
  url={https://arxiv.org/abs/2505.15216},
}